There are more than 200 different types of cells in an animal like a mouse or human, from bone and blood to skin, spleen, heart, hair and everything else in between.

Look more closely, and the picture becomes much more detailed. For example, there are at least 10,000 different types of nerve cells in the brain – adding up to many billions in total – each with its own particular characteristics and specialised job.

As an analogy, imagine a huge international company like a bank. There are broad groups of jobs within the organisation – managers, administrators, accountants, customer service representatives, communications specialists, receptionists, cleaners, and so on – and individual people or groups within each team may have an even more specialised role.

Simply saying that all of these people are ‘bank workers’ tells you nothing about how the company is structured or how it functions. Similarly, in order to understand how the brain works, we need to map out exactly what all these cells are like, where they are and what they’re doing.

Recent advances in technology mean that researchers can measure the patterns of gene activity in single cells taken from anywhere in the body relatively quickly and easily, generating huge datasets from thousands or even millions of cells.

That’s the easy bit. The hard part is sifting through all this information and distinguishing specific groups of cells that share the same profile, meaning that they are likely to have the same function in the body. One of the biggest problems is that gene activity measured in single cells is very noisy, with lots of random fluctuations in activity levels between cells, making it hard to figure out whether any two cells are really alike.

One recent dataset contains gene activity profiles from about 1.3 million individual cells collected from the brain of a developing mouse foetus around three quarters of the way through pregnancy. Ten times bigger than any previous dataset, it was produced by the company 10x Genomics, which made it publicly available in the hope that someone might be able to come up with a way of analysing such a huge amount of information.

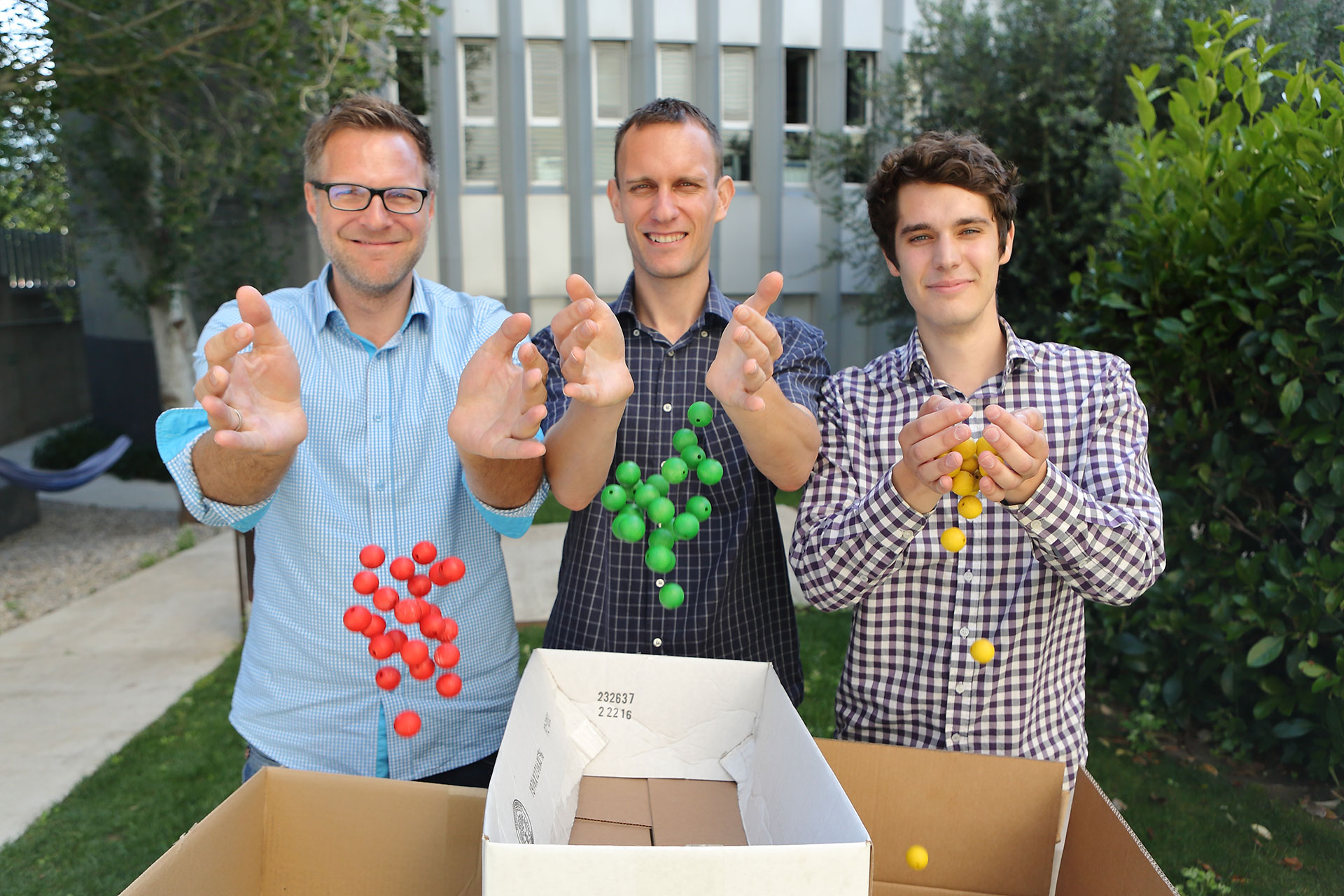

The challenge caught the attention of Holger Heyn, a team leader focusing on single-cell genomics at the Centro Nacional de Análisis Genómico (CNAG-CRG), and his postdoctoral research fellow Giovanni Iacono.

“The falling cost and rising speed of single cell techniques mean that there is a tidal wave of data right now,” says Heyn. “It may be one million cells now, but it could soon be ten million or more – we wanted to build a tool to analyse these huge datasets in a way that would scale up in the future.”

Heyn and Iacono set about building a new computer tool known as bigSCale, which would be capable of sifting through the data from all 1.3 million brain cells and identifying the different types hidden within. One of the biggest challenges was figuring out how to handle such a large amount of biological data and load it into the computer’s working memory for processing.

To solve the problem, the researchers worked out a trick for compressing the data: they pool the data from cells that all seem to be the same to create index cells, or i-cells, and load those into the computer instead. It’s a bit like packing up to move house: putting every single item separately into the back of a van would take a very long time and be chaotic and confusing, but the task becomes much more manageable if you put books in one box, plates and bowls in another and so on. And it’s much easier to unpack and sort everything out again at the other end.

“Each i-cell represents a typical cell of a particular type, which enables us to reduce the dataset from more than a million cells to around 26,000,” Heyn says. “We compress everything into i-cells and analyse the biologically relevant groups, then we can unpack it again later to see all the individual cells.”
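To make the packing idea concrete, here is a minimal Python sketch of pooling cells into i-cells and unpacking them again. It is a toy illustration, not bigSCale's actual code: the function name `pool_into_icells`, the tiny count matrix and the group labels are all invented for the example, and it simply assumes that some earlier step has already decided which cells look alike. In the real dataset the same idea takes roughly 1.3 million cells down to around 26,000 i-cells, about a 50-fold reduction.

```python
import numpy as np


def pool_into_icells(counts, labels):
    """Sum the profiles of all cells that share a label (hypothetical helper).

    counts: (n_cells, n_genes) array of gene activity counts.
    labels: (n_cells,) array; cells with the same label are assumed alike.
    Returns the pooled (n_groups, n_genes) matrix and, for each group,
    the row indices of the original cells it contains.
    """
    counts = np.asarray(counts)
    labels = np.asarray(labels)
    groups, inverse = np.unique(labels, return_inverse=True)
    icells = np.zeros((len(groups), counts.shape[1]), dtype=counts.dtype)
    # Add each cell's row into the row of the group it belongs to.
    np.add.at(icells, inverse, counts)
    # Remember the members of each group so the box can be unpacked later.
    members = [np.flatnonzero(inverse == g) for g in range(len(groups))]
    return icells, members


# Toy data: 5 cells x 3 genes, with made-up similarity labels.
counts = np.array([[5, 0, 1],
                   [4, 1, 0],
                   [0, 7, 2],
                   [0, 6, 3],
                   [1, 8, 2]])
labels = np.array([0, 0, 1, 1, 1])

icells, members = pool_into_icells(counts, labels)
compression = counts.shape[0] / icells.shape[0]  # 5 cells -> 2 i-cells

# "Unpacking": recover the individual cells behind the second i-cell.
unpacked = counts[members[1]]
```

Keeping the membership lists alongside the pooled matrix is what allows the analysis to run on the small matrix while still tracing any interesting group back to its individual cells.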

“It was the first time I had built such an ambitious tool but every time there was a challenge – whether it was in the size of the computer memory or data storage, the steps in the analysis – we just had to take things apart, solve the problem and put it all back together,” adds Iacono.

Heyn and Iacono used this method to home in on elusive Cajal-Retzius cells, a small group of hard-to-find nerve cells named after the neuroscientists Santiago Ramón y Cajal and Gustaf Retzius, who first described them at the end of the 19th century. They exist for a short time during development, helping to control the organisation and growth of important parts of the brain, but die soon after birth.

Their rarity and brief existence make Cajal-Retzius cells hard to isolate and study, but the CNAG-CRG team discovered more than 15,000 of them in the whole 1.3 million cell mouse brain dataset – the largest group of this type of cell ever analysed in this way.

“This huge dataset had just been sitting there for more than one year, full of super-interesting biological information that nobody could look at,” Iacono explains. “Now we can find rare cells with properties that have never been seen before.”

bigSCale has been quickly picked up by many researchers working on large-scale cell mapping projects, and Iacono has rewritten the software in a more user-friendly language to encourage as many people as possible to use it. One possible application is the Human Cell Atlas – an ambitious project aiming to map all the million-plus cell types in the human body, from early development in the womb through to adulthood and in diseases such as cancer.

Another opportunity is in clinical research, analysing single cells in blood or tissue samples to monitor the progression of diseases like cancer or even spot the early warning signs of illness before they become outwardly obvious.

“bigSCale provides a glimpse into the future of what big data analysis can look like, and we’re hoping it will be taken up by many people working in the field,” says Heyn. “We’re excited to see how it grows, and happy to be contributing to the research community.”